GPT-4o for scoring the Rey-Osterrieth Complex Figure

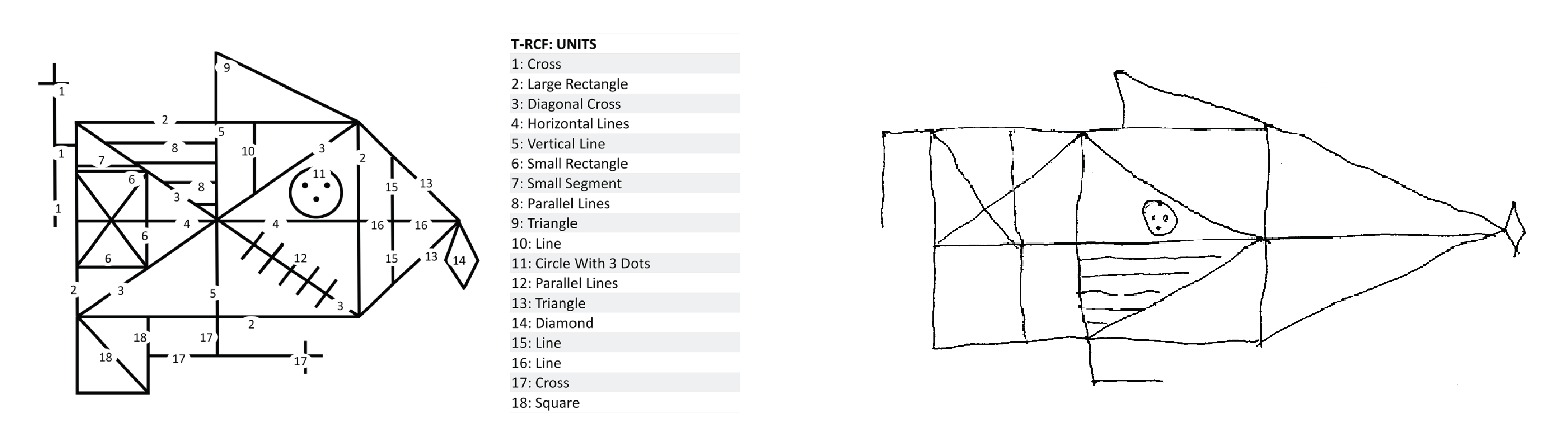

Last week, I started playing with the new GPT-4o, the latest multimodal model from OpenAI, which can reason across audio, vision, and text. This model is not yet available on UCSF Versa, so we can’t use it with real patient data. In the example below, I gave the model the original Rey-Osterrieth Complex Figure with its scoring system, wrote a prompt with instructions on how to score the task, and provided a random copy I found on the web.

I asked the model to produce:

- A description of the copy that mentions the structures of the figure.

- A single number representing the copy’s final score.

Because LLMs are leaky abstractions (the same prompt can produce different outputs from one run to the next), I used a tool that parses the output so that it conforms to a predefined schema. Here is the model’s response:
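To make the schema idea concrete, here is a minimal sketch using only the Python standard library. It is not the tool used in the notebook. The field names `description` and `score`, the helper `parse_response`, and the 0 to 36 range (18 units worth up to 2 points each) are assumptions made for illustration.

```python
import json
from dataclasses import dataclass

# Assumption: 18 scoring units, each worth at most 2 points.
MAX_SCORE = 36.0


@dataclass(frozen=True)
class RocfResult:
    """Hypothetical schema: the two fields requested from the model."""
    description: str
    score: float


def parse_response(raw: str) -> RocfResult:
    """Parse a JSON string from the model and reject anything off-schema."""
    data = json.loads(raw)  # raises ValueError (JSONDecodeError) on bad JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")

    description = data.get("description")
    score = data.get("score")

    if not isinstance(description, str) or not description.strip():
        raise ValueError("description must be a non-empty string")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(score, bool) or not isinstance(score, (int, float)):
        raise ValueError("score must be a number")
    if not 0 <= score <= MAX_SCORE:
        raise ValueError(f"score must be between 0 and {MAX_SCORE}")

    return RocfResult(description=description.strip(), score=float(score))


if __name__ == "__main__":
    raw = '{"description": "Large rectangle present but misplaced.", "score": 10}'
    print(parse_response(raw))
```

If the model returns something that does not fit the schema, the caller can catch `ValueError` and retry the request instead of passing a malformed result downstream.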

Analysis of the Patient’s copy of the REY O Complex figure:

The patient’s drawing of the Rey-Osterrieth Complex Figure shows significant deficiencies in both completeness and organization. Several key structures are either missing or incorrectly placed. The cross (1), small rectangle (6), small segment (7), parallel lines (8), vertical line (10), parallel lines (12), lines (15 and 16), and the cross (17) are absent. The large rectangle (2), diagonal cross (3), horizontal lines (4), triangle (9), circle with 3 dots (11), and diamond (14) are present but inaccurately placed. The lines (5 and 13) are present and correctly placed. The overall structure lacks precision and coherence, reflecting the patient’s visuospatial constructional deficits.

Patient score: 10
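One way to sanity-check a response like this is to recompute the score from the description. The sketch below assumes a simplified rubric: 2 points for a unit that is present and correctly placed, 1 point for a unit that is present but inaccurately placed, and 0 for an absent unit. The unit numbers are copied from the description above; the point mapping and the helper names are assumptions, not the prompt's exact rules.

```python
# Assumed simplified rubric (points per unit).
POINTS = {"correct": 2.0, "misplaced": 1.0, "absent": 0.0}

# Unit numbers as listed in the model's description above.
REPORTED = {
    "absent": [1, 6, 7, 8, 10, 12, 15, 16, 17],
    "misplaced": [2, 3, 4, 9, 11, 14],
    "correct": [5, 13],
}

ALL_UNITS = set(range(1, 19))  # the figure has 18 scoring units


def tally(reported: dict[str, list[int]]) -> float:
    """Sum the points implied by the description."""
    return sum(POINTS[status] * len(units) for status, units in reported.items())


def unmentioned_units(reported: dict[str, list[int]]) -> list[int]:
    """Return the units the description never mentions."""
    mentioned = {u for units in reported.values() for u in units}
    return sorted(ALL_UNITS - mentioned)


if __name__ == "__main__":
    print("Recomputed score:", tally(REPORTED))
    print("Units not mentioned:", unmentioned_units(REPORTED))
```

Under these assumptions the tally is 6 × 1 + 2 × 2 = 10, which matches the reported score, and the check also shows that unit 18 does not appear anywhere in the description.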

It is far from perfect, but I’m quite impressed! If you want to see the full implementation and try it yourself with some random copies (no patient data), I prepared a Google Colab Notebook. Let me know if you have any issues following the instructions. I can think of other potential applications, such as automatically scoring old archived handwritten material.